|

Neil Nie

I am an incoming CS Ph.D. student at UC Berkeley. Currently, I'm a Master's student in computer science at Stanford University, where I research robot learning, foundation models, and computer vision, advised by Professor Jiajun Wu. I graduated from Columbia University with a Bachelor's in computer science. At Columbia, I was very fortunate to be advised by Professor Shuran Song, working on robot learning and interactive perception. I have interned at Apple multiple times, mainly working on computer vision and multi-modal sensing. I've contributed to health and fitness features, gaze and gesture on Apple Vision Pro, and novel view synthesis / neural rendering on Apple Vision Pro. |

|

| Nov 2024 | BLADE is accepted at CoRL 2024, and is selected for oral presentation at the LEAP Workshop. | |

| Jun 2024 | Return to Apple as a CV/ML scientist intern, working on 4D gaussian splatting. | |

| Sep 2023 | Start pursuing an MS in CS at Stanford. Join SVL as a graduate researcher. | |

| Jun 2023 | Return to Apple as a CV/ML algorithms intern, working on Apple Vision Pro. | |

| May 2023 | Graduated from Columbia University with a B.S. in computer science. | |

| Apr 2023 | SfA is accepted at IROS 2023. | |

| Sep 2019 | Our work on memristor arrays is published in Nature Machine Intelligence. |

| Jan 2017 | Invited Talk @ TEDxDeerfield "Understanding AI and Its Future" [YouTube] |

|

Making Tea

Washing Dishes with Humans

Wiping the Countertop

|

I'm broadly interested in robot learning, with the long-term goal of building generalist robots for the household. Specifically, my research focuses on 1) applying foundation models to robotics and 2) learning from human demonstrations. |

|

Learning Compositional Behaviors from Demonstration and Language

Weiyu Liu*, Neil Nie*, Ruohan Zhang, Jiayuan Mao†, Jiajun Wu†

CoRL, 2024 (Oral Presentation, LEAP Workshop)

project page / arXiv

BLADE is a framework for long-horizon robotic manipulation that integrates imitation learning and model-based planning. It leverages language-annotated demonstrations, extracts abstract action knowledge from large language models (LLMs), and constructs a library of structured, high-level action representations. |

|

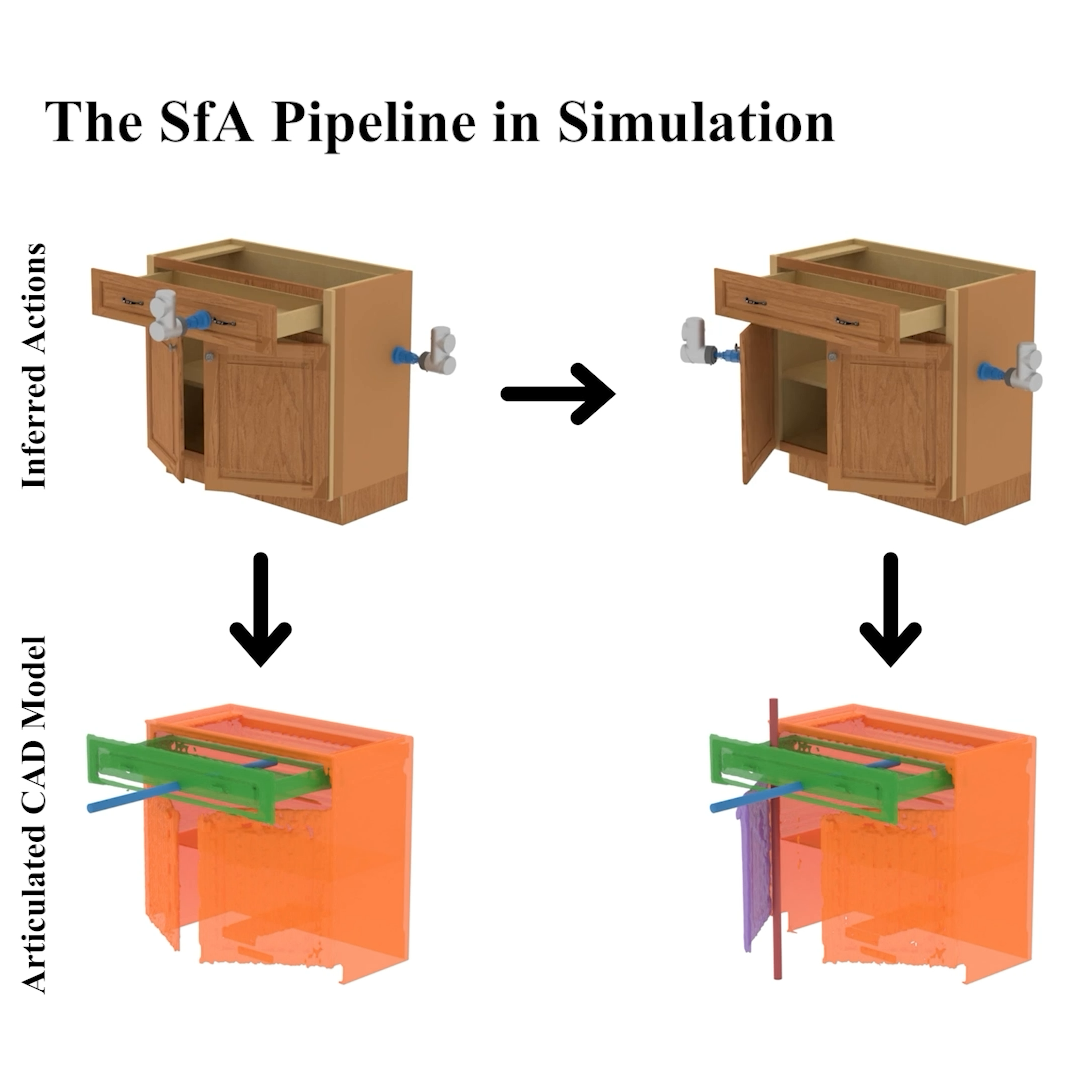

Structure From Action: Learning Interactions for Articulated Object 3D Structure Discovery

Neil Nie, Samir Yitzhak Gadre, Kiana Ehsani, Shuran Song

IROS, 2023

project page / arXiv

SfA is a framework to discover 3D part geometry and joint parameters of unseen articulated objects via a sequence of inferred interactions. We show that 3D interaction and perception should be considered in conjunction to construct 3D articulated CAD models. |

|

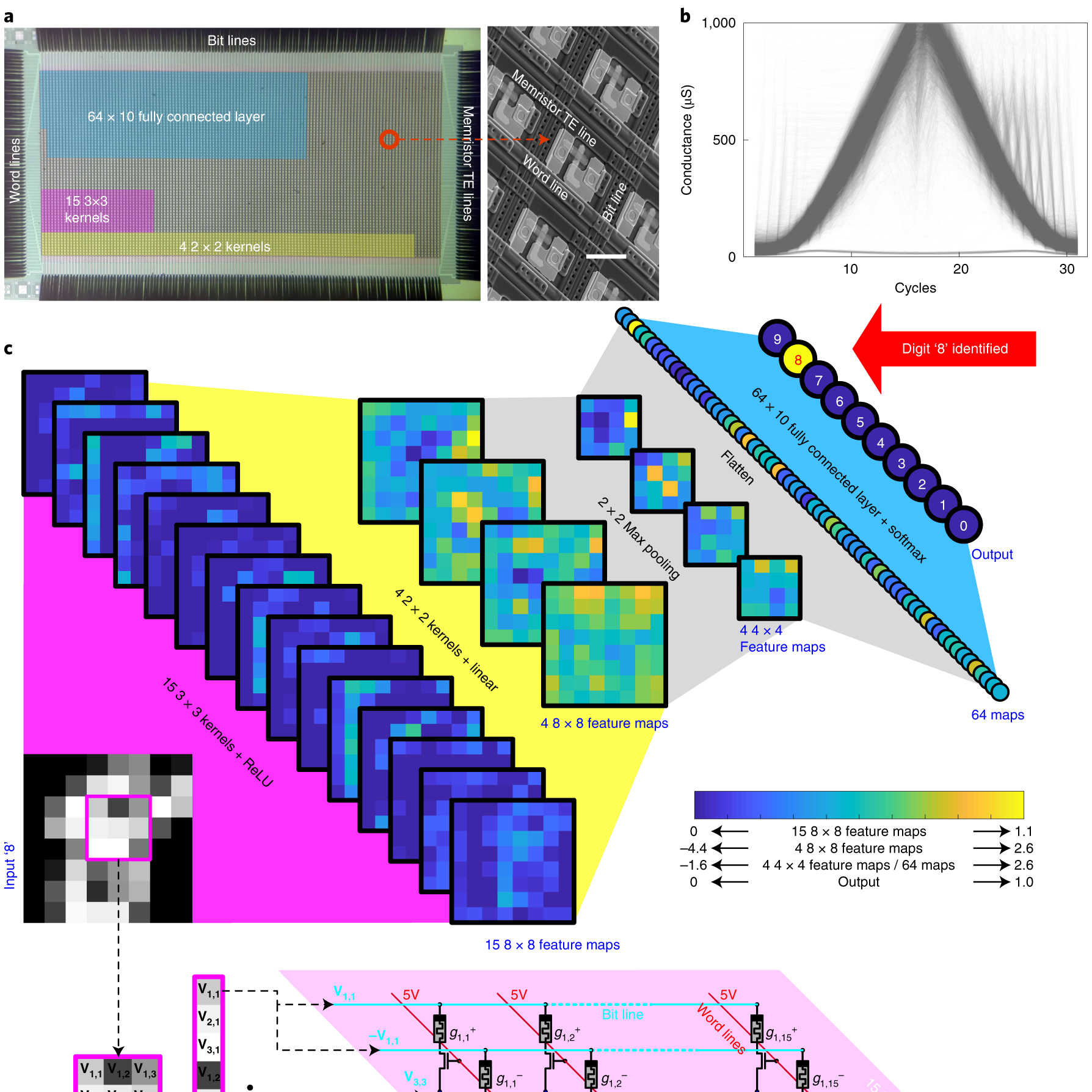

In Situ Training of Feed-Forward and Recurrent Convolutional Memristor Networks

Zhongrui Wang, Can Li, Peng Lin, Mingyi Rao, Yongyang Nie, Wenhao Song, Qinru Qiu, Yunning Li, Peng Yan, John Paul Strachan, Ning Ge, Nathan McDonald, Qing Wu, Miao Hu, Huaqiang Wu, R Stanley Williams, Qiangfei Xia, J Joshua Yang

Nature Machine Intelligence, 2019

Paper Link

This work demonstrates energy-efficient, memristor-based convolutional networks that achieve high accuracy using weight-sharing techniques and reduced parameters, highlighting potential for future edge AI. |

|

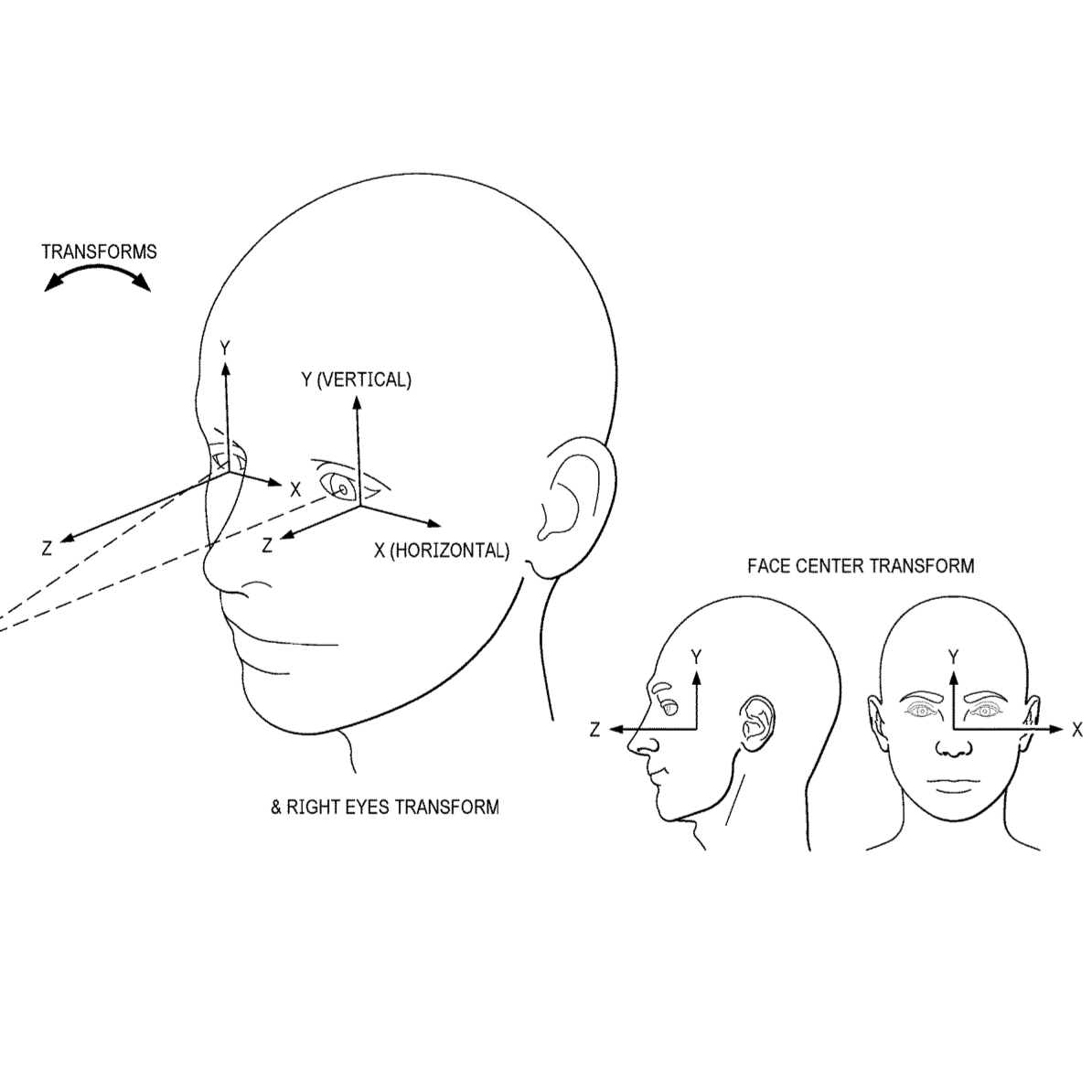

Tracking Caloric Expenditure Using a Camera

Aditya Sarathy, James P. Ochs, Yongyang Nie

Apple Inc. | US Patent US20240041354A1 | Filed 2023 | Patent link

The embodiments describe a method for tracking caloric expenditure using a camera by analyzing face tracking data, motion sensor data, and environmental factors to estimate energy expenditure. |

|

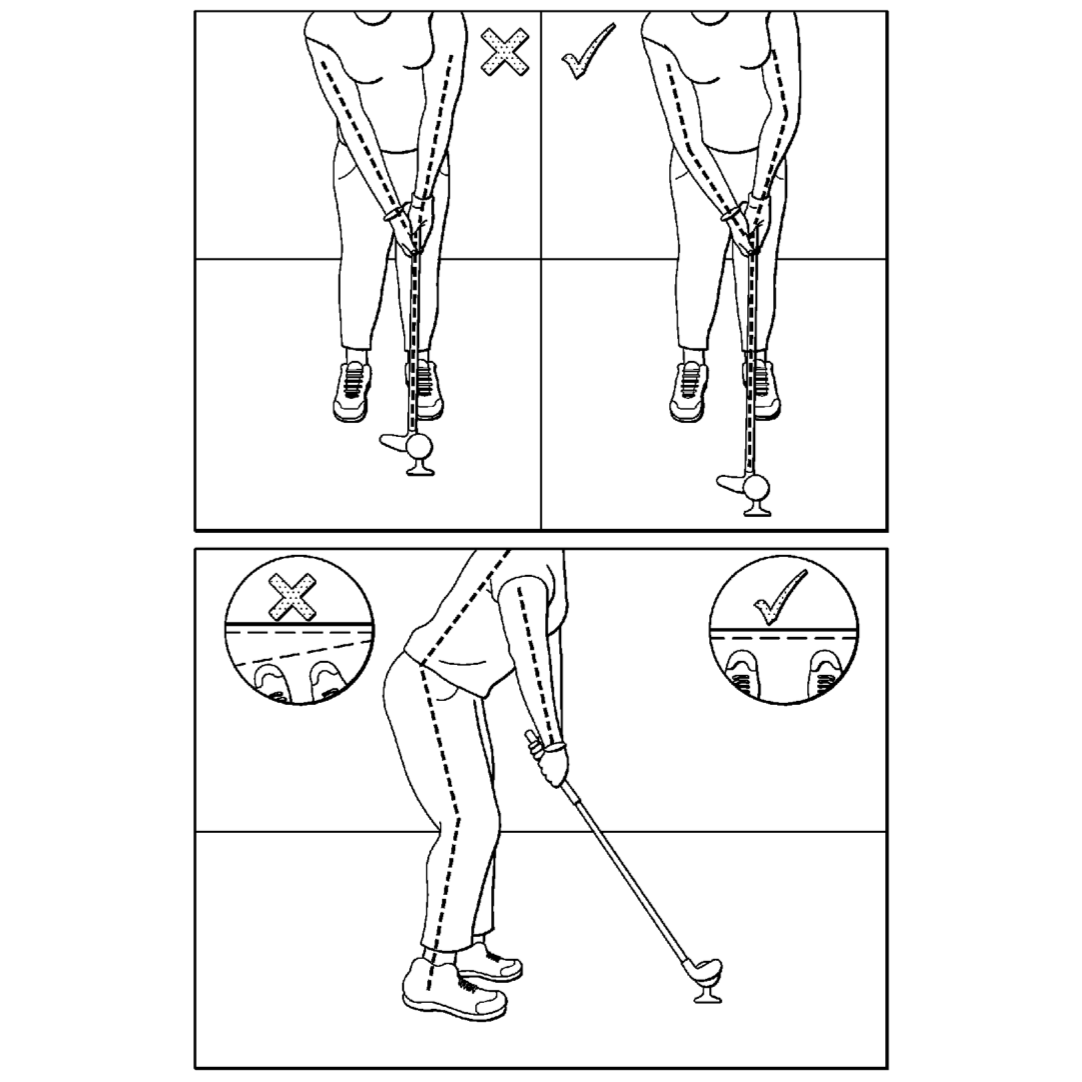

Posture and Motion Monitoring Using Mobile Devices

Aditya Sarathy, Rene Aguirre Ramos, Umamahesh Srinivas, Yongyang Nie

Apple Inc. | US Patent US20230096949A1 | Filed 2022 | Patent link

The embodiments describe a method for monitoring posture and motion using mobile devices by combining motion sensor data and skeletal data to estimate and classify a user's body pose through machine learning. |

Teachings |

|

Thank you for visiting! Last updated: 11/25/2024. Website code borrowed from Jon Barron. (c) Neil Nie, 2024. All rights reserved. |